Abstract

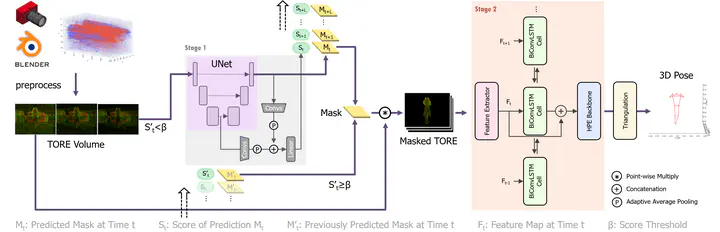

Technology-mediated dance experiences are a key form of entertainment on both traditional and virtual reality-based gaming platforms. These platforms depend predominantly on unobtrusive, continuous human pose estimation to capture input. Current dance gaming solutions primarily use RGB or RGB-Depth cameras; however, the former degrades in low light because of motion blur and reduced sensitivity, while the latter suffers from high power consumption, low frame rates, and a restricted operating distance. With ultra-low latency, high energy efficiency, and a wide dynamic range, neuromorphic cameras offer a viable way to overcome these limitations. Here, we introduce YeLan, a neuromorphic camera-based, three-dimensional, high-frequency human pose estimation (HPE) system that remains robust in low-light environments and against dynamic backgrounds. We have compiled the first neuromorphic camera dance HPE dataset and developed a fully customizable, physics-aware motion-to-event simulator. YeLan outperforms baseline models under challenging conditions and is resilient to variations in clothing type, background motion, viewing angle, occlusion, and lighting.